Author: Marshall Schott

When collecting research data, it’s widely viewed as imperative that participants remain blind to the variable in question, as knowledge of what’s being tested can bias their responses. This is definitely the case when it comes to certain types of sensory analysis where the goal is to determine if a specific variable or set of variables has a meaningful impact. One such method is the triangle test, in which participants receive two identical samples and one sample that’s different, then are asked to identify the unique sample. The purpose is to determine if a perceptible sensory difference exists between two products.

This is the method Brülosophy adopted for the exBEERiment (xBmt) series, and in keeping with industry standards, we chose to keep all participants blind to the variables being tested. Over the 8 years and nearly 400 xBmts we’ve completed since the start of this series, a number of people have voiced their discontent with the fact we don’t inform tasters of the variable prior to administration of the triangle test. Of the numerous complaints we’ve fielded, nearly all have claimed that the potential differences caused by the variables being tested are likely so small that knowledge beforehand could help tasters better know what to focus on, which ultimately could lead to more significant findings.

Admittedly, my initial reaction to these complaints was somewhat cynical; they seemed like obvious attempts to reconcile the fact our results failed to confirm presumed brewing truths. However, as time went on, my adherence to the standard began to loosen a bit and I began to wonder what influence knowledge of the variable, or sightedness, might have on participants’ triangle test performance. To get an idea, we collected data from both blind and sighted participants in each of two xBmts for comparison.

| PURPOSE |

To evaluate the differences in triangle test performance between participants who were blind to the variable being tested and those who were informed of the variable prior to completing the test.

| METHODS |

Seeing as this comparison involved two previously published xBmts, we opted to keep the brewing information limited for the sake of brevity.

Rehydration vs. Starter With Dry Yeast In A Pale Ale

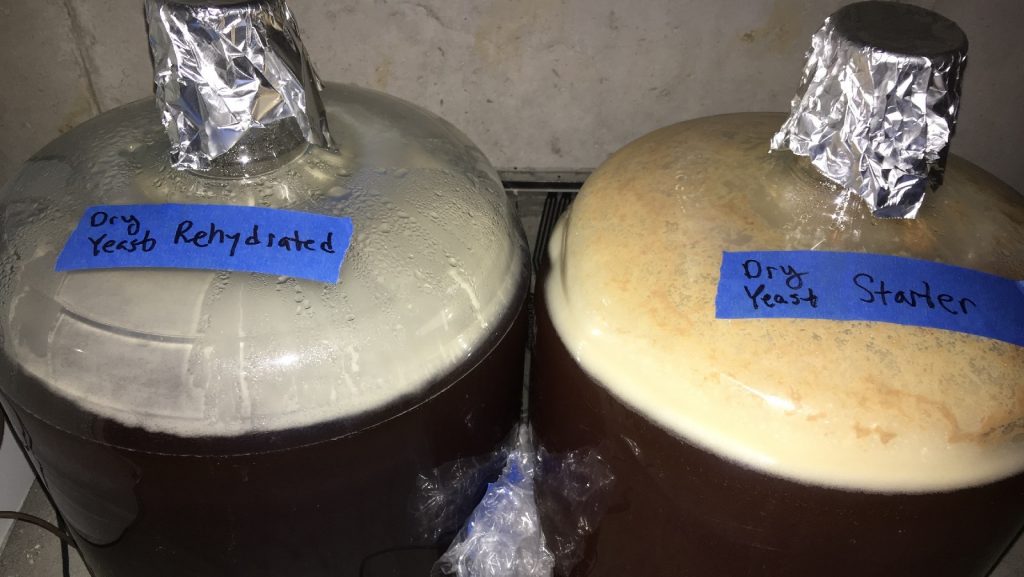

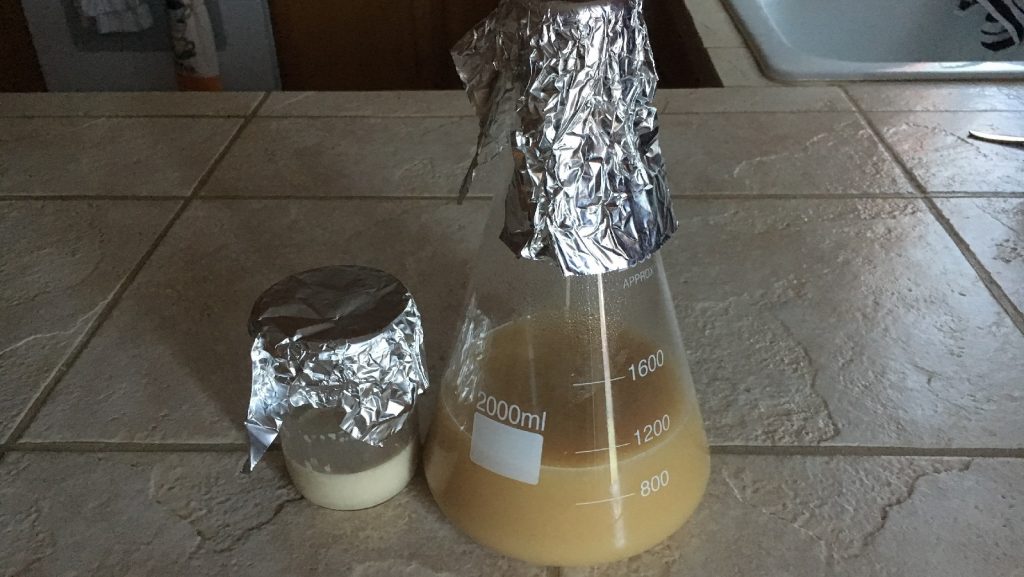

In this xBmt, we compared a Pale Ale fermented with dry yeast that was either rehydrated or propagated in a starter. Following the boil, equal volumes of wort were transferred to identical fermenters, at which point the yeasts were pitched.

The beer pitched with the starter seemed more active at 24 hours than the one pitched with rehydrated yeast.

After 1 week, the yeast starter beer appeared to be done fermenting while the beer pitched with rehydrated yeast was still slightly active.

At 10 days post-pitch, hydrometer measurements showed both beers were at the same FG.

After an overnight cold crash, the beers were kegged, fined with gelatin, and burst carbonated before the gas was reduced to serving pressure. After a week of conditioning, they were ready for evaluation.

Low vs. High Mash Temperature In A Munich Helles

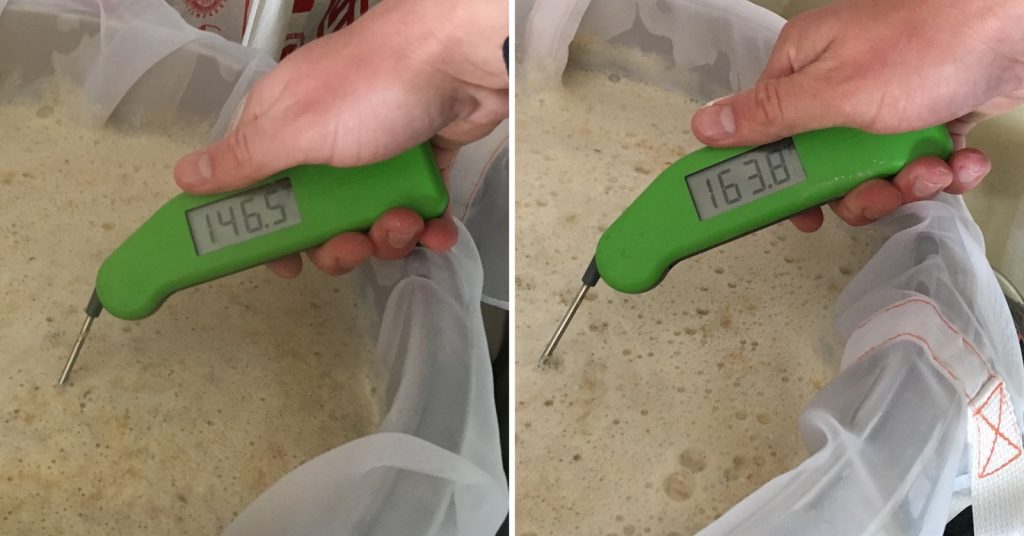

In this xBmt, we compared a Munich Helles mashed at 146°F/63°C to one mashed at 164°F/73°C.

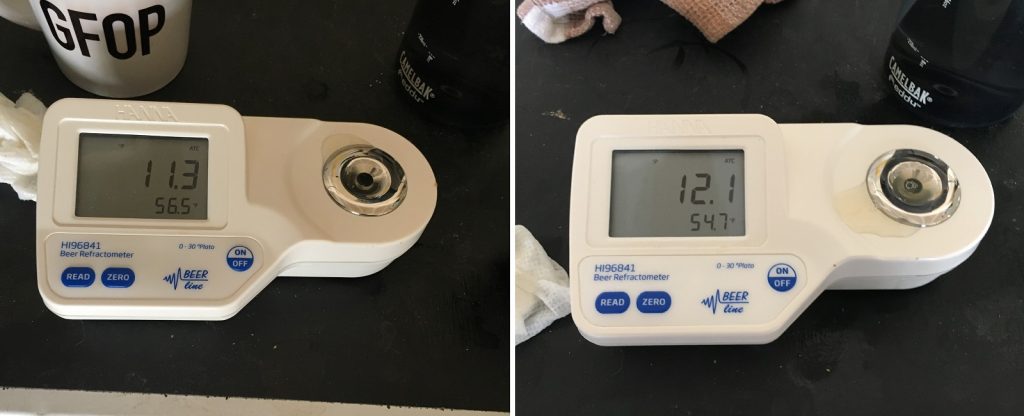

Following 60-minute mash rests, the worts were boiled before being chilled and transferred to identical fermenters. Refractometer readings revealed the OG of the low mash temperature wort was 0.004 SG points lower than that of the high mash temperature wort.

Once the beers had finished chilling to the target fermentation temperature of 50°F/10°C, a large starter of Imperial Yeast L13 Global was evenly split between them. Hydrometer measurements taken 2 weeks later confirmed previous findings that mash temperature has a rather drastic impact on attenuation.

The beers were transferred to CO2-purged kegs, then placed in a keezer where they were left to lager on gas for 8 weeks before they were ready for evaluation.

| RESULTS |

For each set of data collected, participants with varying levels of experience were served 2 samples of one beer and 1 sample of the other in differently colored opaque cups, then asked to identify the unique sample. For each xBmt, the first set of data was collected from participants who were blind to the variable being tested. A second set was collected later from participants who were informed of the variable as well as the impact it purportedly has on beer, the goal being to help them focus on distinguishing differences. Ultimately, neither blind nor sighted tasters were able to reliably distinguish the beers in either xBmt.

| | Dry Yeast Starter xBmt (BLIND) | Dry Yeast Starter xBmt (SIGHTED) | Mash Temperature xBmt (BLIND) | Mash Temperature xBmt (SIGHTED) |
|---|---|---|---|---|
| Participants | 25 | 13 | 33 | 28 |
| Expected | 13 | 8 | 17 | 15 |
| Actual | 6 | 6 | 12 | 11 |
| P-Value | 0.89 | 0.24 | 0.42 | 0.31 |
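For readers curious where numbers like these come from, here is a minimal sketch of a triangle test calculation. It assumes a one-sided binomial test with a 1-in-3 chance of guessing correctly, which is the usual treatment for triangle tests; the article doesn't state the exact method used, and the function names below are made up for illustration.

```python
from math import comb

# Assumed chance of picking the odd cup by pure guessing (1 of 3 cups).
P_CHANCE = 1 / 3


def triangle_p_value(n, correct, p_chance=P_CHANCE):
    """One-sided binomial p-value: chance of at least `correct` right answers
    out of `n` tasters if everyone were guessing."""
    return sum(
        comb(n, k) * p_chance**k * (1 - p_chance) ** (n - k)
        for k in range(correct, n + 1)
    )


def min_correct_for_significance(n, alpha=0.05):
    """Smallest number of correct picks whose p-value drops below `alpha`."""
    for correct in range(n + 1):
        if triangle_p_value(n, correct) < alpha:
            return correct
    return None


# (participants, correct identifications) copied from the results table.
RESULTS = {
    "Dry Yeast Starter, blind": (25, 6),
    "Dry Yeast Starter, sighted": (13, 6),
    "Mash Temperature, blind": (33, 12),
    "Mash Temperature, sighted": (28, 11),
}

for label, (n, correct) in RESULTS.items():
    print(
        f"{label}: p = {triangle_p_value(n, correct):.2f}, "
        f"correct needed for p < 0.05 = {min_correct_for_significance(n)}"
    )
```

Under these assumptions, the p-values come out within rounding of the ones in the table. The thresholds also line up with the "Expected" row, which suggests that row lists the number of correct responses needed to reach significance rather than the number expected by chance.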

| DISCUSSION |

Bias is a fascinating phenomenon that impacts every human being, whether they think so or not, and has the power to influence behavior in the most curious of ways. It’s for this reason researchers generally prefer to keep participants blind to what’s being tested, as there’s at least some chance knowledge of the variable may influence one’s response and thus render the findings meaningless. As it relates to sensory analysis, and the triangle test in particular, the argument that participant sightedness may in fact improve one’s performance by helping them to hone their focus is understandable, though the fact performance rates in two xBmts were similar between blind and sighted participants calls this claim into question.
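One way to put a number on "similar" is to compare the share of correct picks in the blind and sighted groups directly. The sketch below does that with a two-sided Fisher's exact test. To be clear, this is not an analysis we ran for the xBmt; it's a swapped-in technique offered as an illustration, and the function name is hypothetical.

```python
from math import comb


def fisher_exact_two_sided(correct_a, n_a, correct_b, n_b):
    """Two-sided Fisher's exact test comparing two groups' correct counts.

    Sums the hypergeometric probabilities of every possible split of the
    correct answers that is no more likely than the split actually observed.
    """
    total_correct = correct_a + correct_b
    total = n_a + n_b

    def prob(x):
        # Probability that group A holds exactly x of the correct answers.
        return comb(n_a, x) * comb(n_b, total_correct - x) / comb(total, total_correct)

    lowest = max(0, total_correct - n_b)
    highest = min(n_a, total_correct)
    observed = prob(correct_a)
    # Small relative tolerance guards against floating-point ties.
    return sum(
        prob(x) for x in range(lowest, highest + 1) if prob(x) <= observed * (1 + 1e-9)
    )


# (blind correct, blind n, sighted correct, sighted n) from the results table.
COMPARISONS = {
    "Dry Yeast Starter": (6, 25, 6, 13),
    "Mash Temperature": (12, 33, 11, 28),
}

for label, (c_blind, n_blind, c_sighted, n_sighted) in COMPARISONS.items():
    p = fisher_exact_two_sided(c_blind, n_blind, c_sighted, n_sighted)
    print(
        f"{label}: blind {c_blind / n_blind:.0%} correct, "
        f"sighted {c_sighted / n_sighted:.0%} correct, Fisher p = {p:.2f}"
    )
```

Keep in mind that with groups this small, a test like this has little power, so a non-significant result is weak evidence of no difference rather than proof of it.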

There are likely some who view these results not as justification for continuing with keeping participants blind, but rather as evidence that informing participants of the variable didn’t have a negative effect, so that’s how it should be done.

While I can personally relate to this sentiment, I struggle to see the added value of straying from the standard approach of maintaining participant blindness. Sure, this is just beer, we’re not trying to cure cancer, but there’s something satisfying to me about knowing our results weren’t intentionally tainted by bias. Should new information come out suggesting otherwise, I’m certainly open to change, but until then, we’ll keep on keeping on.

If you have any thoughts about this xBmt, please do not hesitate to share in the comments section below!


7 thoughts on “exBEERiment | Impact Participant Sightedness Has On Triangle Test Performance”

Again, this is like doing a political survey of 100 people and saying it represents the whole country. It takes many, probably thousands of testers, to even begin to get accurate results. But keep up the good work. It is still fun to read about the tests.

There’s a good BruLab episode that talks through the statistics of Brulosophy that is interesting. Going to be a big difference between political response where it’s common for location to have a large impact on results vs a human’s ability to taste. It’s not going to ever be perfect, but statistics are weird.

I think the ‘Expected’ values of the mash temperature xBmt in the table are flipped.

This is a super interesting experiment because it looks at the validity of the standard brulosophy experimental design. Just beer or the cure for cancer, it is still something you want to have confidence in. Interesting results! I see some ability of the blind tasters to distinguish between the two yeast forms, which completely evaporates when the blind is removed, suggesting ‘blind’ can be a factor in minimizing bias, although not statistically significant at p=095. The finding that the tasters could not distinguish between the two Helles blows me away. I would have thought that the high temp mash would have much more mouthfeel and residual sweetness on the lips. Definitely a huge difference in FG. Perhaps a topic of future exbeeriments?

Bravo. When people have their worldviews or preconceived notions challenged by data they will often go to incredible lengths to attempt to discredit the data rather than revise their thinking even a little bit. What I like about Brülosophy is that you just provide the data – if that confirms your worldview, great, if not, then it’s up to you what you should do with that data. The exbeeriments don’t profess a new worldview, they just provide data.

Sadly we have become so used to being fed data entirely in the context of whether it supports our ideas or not that the world has lost the critical thinking capability to question our own ideas. It’s not “IF data supports my ideas THEN support data ELSE try to discredit the data”, it should be “Hmm, that wasn’t what I expected, I wonder if I should think differently about this, or maybe conduct my own experiment and check it out for myself.”

Keep up the good work!

I’m also impressed by the transparency of Brulosophy regarding assumptions and methods. I don’t think there is nearly enough awareness how much of the results of research being published in fields like econ and psych is done via absolute black boxes for modelling.

A lot of researchers consider their models to be proprietary intellectual property and bristle at the idea of any transparency. Want to know what their assumptions are? Forget it. Even data sets that feed their models are considered out of bounds. Want to know how representative their samples are? You just have to trust them.

To be fair, the pendulum has swung a little bit back toward transparency. But a lot of researchers are deeply conflicted in terms of their goals of monetizing their research while appearing pure, and it leads to a lot of bad results.

Well done, Marshall. Keep up the good work. I am surprised at the Helles experimental results. Perhaps the malts we use are so well modified today that mash temperature is not so important.